What is a deepfake? We talked about it with sound artist Halsey Burgund, who co-directed “In Event of Moon Disaster” with Francesca Panetta: an art/education project produced by the MIT Center for Advanced Virtuality to help people understand this phenomenon (and misinformation) a bit better.

So, what if I told you that the actor who played Joker was Jim Carrey? No, seriously, it is! …Joaquin who, you say? I think you’re wrong, there, I’m quite sure it was Jim Carrey and I even have proof:

See?

But I have proof of other amazing things. Like… Home Alone certainly starred Sylvester Stallone (here). And there is soon going to be a remake of Back to the Future starring Tom Holland and Robert Downey Jr. Here are the first clips available.

So, before you get your pants in a twist: yes, I was lying. Very much so (even if I’m still rooting for a new movie with Tom and Robert together). What I presented to you are just very popular examples of the phenomenon called deepfake, which has made the headlines (literally…) in the past two years.

You have all seen videos of Nicolas Cage where Nicolas Cage was not supposed to be (no, I’m not talking about his whole filmography, thank you very much).

You have heard about videos where Obama or Trump say things they never actually proclaimed… (and, once again, no: Trump saying you possibly need to inject disinfectant to fight Covid19 is – sadly – a real one).

You saw Jon Snow feeling sorry about season 8. And if you have not, go see him here, it’s priceless:

These AI-generated fake videos (and audio clips) started out in academia and research, but their scientific origins soon gave way to other uses:

Fake videos can now be created using a machine learning technique called a “generative adversarial network”, or a GAN. A graduate student, Ian Goodfellow, invented GANs in 2014 as a way to algorithmically generate new types of data out of existing data sets. […] The use of this machine learning technique was mostly limited to the AI research community until late 2017, when a Reddit user who went by the moniker “Deepfakes” – a portmanteau of “deep learning” and “fake” – started posting digitally altered pornographic videos. He was building GANs using TensorFlow, Google’s free open source machine learning software, to superimpose celebrities’ faces on the bodies of women in pornographic movies.

Oscar Schwartz, You thought fake news was bad? Deep fakes are where truth goes to die, in The Guardian, 12 November 2018

And if before reading this quote you were all thinking about the endless possibilities for fans (i.e. putting the faces of Dean Winchester and Castiel on any scene of Brokeback Mountain – we are innocent fans and we know where our priorities lie), after this quote your mind has probably run to the real problem of deepfake.

Namely, the fact that, with the right amount of technological knowledge and evilness of mind (technical term), you could actually fake any important piece of news or situation out there, and possibly make people believe anything you wanted about anyone you wanted.

Nothing new, there. There has been so much talk of fake news recently that we are becoming familiar with the concept.

What was relatively new to me was the expression “reality apathy”, a dangerous (and disquieting) effect of the current situation:

[reality apathy happens when] Beset by a torrent of constant misinformation, people simply start to give up. Ovadya is quick to remind us that this is common in areas where information is poor and thus assumed to be incorrect. The big difference, Ovadya notes, is the adoption of apathy to a developed society like ours. The outcome, he fears, is not good. “People stop paying attention to news and that fundamental level of informedness required for functional democracy becomes unstable.”

Charlie Warzel, He Predicted The 2016 Fake News Crisis. Now He’s Worried About An Information Apocalypse, in a BuzzFeed News article about Aviv Ovadya, founder of the Thoughtful Technology Project

And indeed, just the other day at fanheart3 we were discussing reactions to Covid19 among the people we knew, and more than one of us mentioned cases of distant – and not so distant – relatives or friends who are still denying what we all know is true, despite the death count.

However, while half of us (not a real percentage, just a manner of speaking) are already going for the Thomas “I-need-to-experience-things” approach (“Except I shall see in his hands the print of the nails, and put my finger into the print of the nails, and thrust my hand into his side, I will not believe”), the other half are not even aware that manipulations like deepfakes exist and that the ability to create them is actually more widespread than it might seem.

That is why, when we read about In Event of Moon Disaster, an immersive installation produced by the MIT Center for Advanced Virtuality and supported by Mozilla and the MIT Open Documentary Lab, we knew that we just had to find out more about it and about the reasons it was created.

In July 1969, much of the world celebrated “one giant leap for mankind.” Fifty years later, nothing is quite so straightforward.

The In Event of Moon Disaster project as presented on the official website

In Event of Moon Disaster illustrates the possibilities of deepfake technologies by reimagining this seminal event. What if the Apollo 11 mission had gone wrong and the astronauts had not been able to return home? A contingency speech for this possibility was prepared for, but never delivered by, President Nixon – until now.

We invite you into this alternative history and ask us all to consider how new technologies can bend, redirect and obfuscate the truth around us.

So, we contacted Halsey Burgund, director of the experience together with Francesca Panetta. Elena and I had the chance to spend an amazing hour on Zoom with him, talking about fake news and misinformation, technologies and audiences, and – obviously – about the amazing work that led these brilliant people to create an installation that could really be mind-changing. A work that will open the eyes of many, many people out there to the dangers we might face but also to the amazing possibilities that an ethical use of certain tools could actually open up for all of us.

Fans especially, if I may say so.

Author note: this interview will be cross-published on XRMust – About Immersive Storytelling (link available soon)

In Event of Moon Disaster and deepfake: the interview with Halsey Burgund

Agnese: So, I watched the video you sent me (a/n fake Nixon reading the speech) and I was shocked. I knew it was a fake but, I’ll admit it, for a moment I could only gape at it and ask myself, did I miss something? Is this… real? I will ask you about how you created it, but before everything else: how did this project start?

About a year ago we came up with the idea behind “In Event of Moon Disaster”. A bunch of us were in a room together: myself, Francesca (ed. Panetta, co-director of the piece) and a few other journalists who were interested in technology and how it can be used in different ways.

We were brainstorming about different ideas and possibilities when deepfakes came to mind. It was something we were aware of, and there were concerns about it and about the fact that almost nothing was being done to control its use. Then the 50th anniversary of the moon landing came up, and I am a huge fan of space exploration and of everything it represents. It was at that point that we remembered the contingency speech written for President Nixon (to be delivered in case the mission failed and the astronauts died on the moon). And it hit us: let’s make President Nixon actually deliver this speech and build an alternate history to show the power that deepfakes bring to the misinformation ecosystem.

The contingency speech is such a beautiful one, wonderfully written, and it talks not only about individual astronauts and their sacrifice, but expands to the idea of exploration and mankind… how we will not be deterred. A hopeful message that rang true with us in a lot of ways. Obviously if this speech had happened in real life, then it would have been a major tragedy, but it did not. We all know things did not go that way. So, highlighting that specific speech does not only reaffirm that it did not happen, but it also gives you the chance to emphasize our desire to explore, a desire that characterizes mankind. We thought the speech would be an effective vehicle for us to communicate the dangers of misinformation through both aesthetic experience and more direct pedagogy.

Elena: the scariest thing for me is news that doesn’t require any particular technology or technical knowledge to be faked. In Event of Moon Disaster requires certain skills, but today anybody can go on a website and say anything they want. A great thing for freedom of speech, a bad thing for accuracy. For example, we all know that social media have algorithms that tend to validate your opinions so you don’t even get to see different ones. Much has been done in this regard, but I wonder, what is the future of information and misinformation in your opinion?

This is a big question. Social media are built on sharing, but what we usually tend to share is inherently more extreme. Also, we often share things too quickly, without thinking them over. Maybe, if you had time to think about that same post, to go back to it an hour later after you have calmed down a bit, you would not share it, or you would do it in a different way.

I am not anti-social media even if I don’t use them often: I think great things can come from them, but they can also be very damaging. However, solutions are neither easy nor obvious. I won’t pretend that I have them. What we are doing is just trying to pull the curtain back a little bit on some of this to get people to think about it and then judge for themselves.

On reality apathy

Misinformation is not new. As soon as there was information, as soon as there was data to report on, there was a way to make those data seem different. Photoshop, for example, has been around for decades and it allows you to manipulate real photos to convey something non-factual. We used all these cheap fakes – reversing videos, slowing down videos, wrong timings, and so on – in our film: we twisted archive footage around, creating something that presents major inaccuracies on purpose. This is to show that our project is not only about deepfakes, but also about easier ways to spread misinformation, ways that don’t require massive technology. You slow down a video of Nancy Pelosi, you make it sound like she is drunk and therefore she cannot be trusted. We want to shed light on this phenomenon and its implications, and so we put together a team made up of different minds – media researchers, historians, journalists, technical experts, and so on – as a way to tackle all this from different points of view: from a social perspective, from a philosophical perspective, from the point of view of those interested in technological progress and so on.

Deepfakes and synthetic media can be used for good just as much as for bad. But the big question they leave you with, at the end of the day, is the same: are we not going to be able to ever know what the truth is, again? Are there always going to be some shadows of doubt over absolutely everything? Are people just going to give up and become apathetic and not accept anything as true other than what they have personally experienced?

I have to say that there is a dissonance with Francesca and myself: we wanted to make the piece as good as we possibly could, but in so doing we increased our own anxiety and fear about the future at the same time. It’s one of the many challenges that everybody goes through when they’re creating something new.

Agnese: what about the creation of In Event of Moon Disaster? Was it difficult to realize and how long did it take your team to finish it?

Creating In Event of Moon Disaster took a lot of effort. Of course, the deepfake was a big part of it, but not the whole part. The rest of the film took a lot of time with editing and, as always, we did not have all the funding we would have liked to have. As for the deepfake itself, it took about four months to complete.

We worked with two companies: Canny AI for the visual elements, and Respeecher for the synthetic speech. We really wanted to do the best possible job with the available technology, because we felt that part of the piece was to give a demonstration of the best technology that was out there at the time. I say “at the time” because things have gotten better since. And indeed, it has become clear to us that the amount of effort it took us to create something of that quality – whether you think it’s a high or a low quality – will be reduced on a monthly basis. As we move forward, new technologies will be available, and they will make the production of such things easier and easier for larger groups of people. Democratization of technology at a high level is a good thing, but when it comes to technologies like these, it just opens them up for more manipulative intentions.

An anecdote from our actual experience: when we started working with Canny AI on the visual elements, they gave us explicit instructions as to what they needed from us and told us that the target video (the video of Nixon that we wanted to manipulate) had to have certain characteristics. It basically needed to be a still shot of Nixon talking. No close-ups, and no motion. This, to make the result more convincing. So, we found a different video from the one we were originally thinking of using, in which the scene started at desk size and then zoomed in on his face.

But then, a month later, the company tried to apply modifications to the video we originally wanted to use… and it worked perfectly! This made it possible for us to add a more dynamic element to the speech itself and therefore to make it more believable.

In other words, just over the course of a month or two, the technology that Canny AI developed improved significantly. And, since then, the technology has got much, much better… and much, much easier to use! I’m quite convinced that if we were to do In Event of Moon Disaster today, we would have results not only more quickly, but also that looked even more convincing!

The one thing that would not change is the fact that the source material that we have is 50 years old, and that creates lots of challenges. Take the audio of President Nixon: there are plenty of recordings of him, but they were all made in random different situations, with different microphones and settings, and that is very problematic and makes our work really challenging. There are advances in noise reduction, and advances in frequency analysis, but I think that that technology has not improved quite as dramatically as some of the deepfake production stuff has.

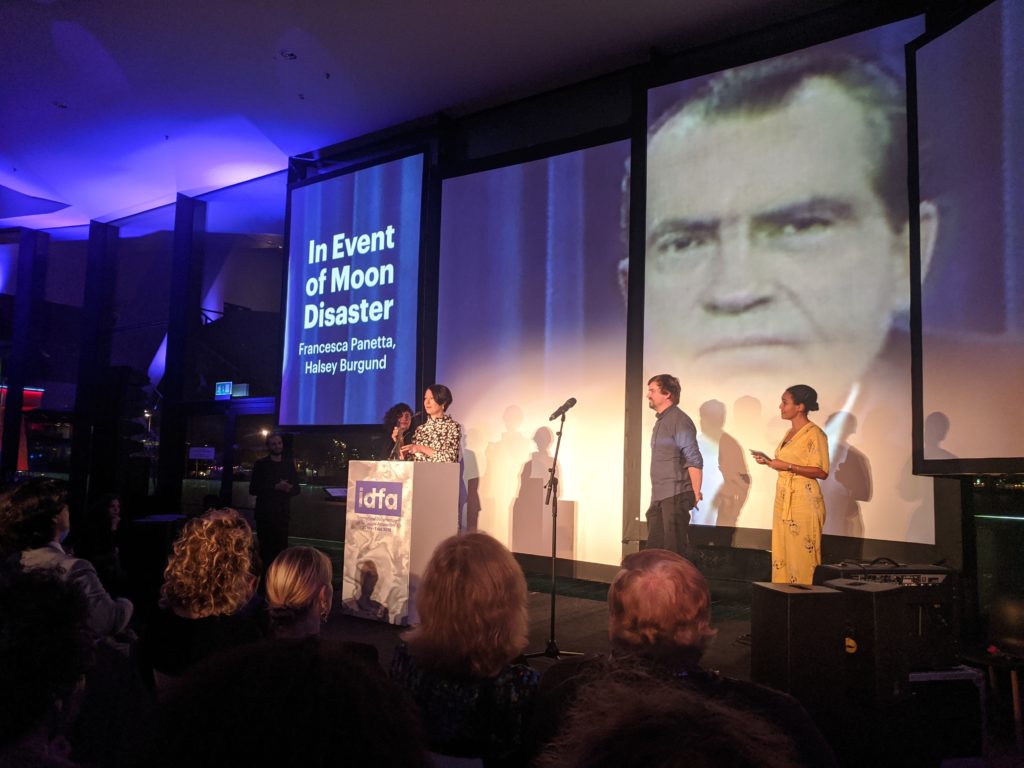

Agnese: you know, I think that a lot of people are not even aware that something like this can be created and that it can look that believable. I know you presented In Event of Moon Disaster at IDFA, the International Documentary Film Festival Amsterdam. I’m curious: what audience reactions did you notice? Were they surprised by what they were experiencing?

We were surprised by how our audience at IDFA reacted! As artists, we really wanted to encapsulate our installation in a way that felt immersive and that did not feel like we wanted to impose education on people. We wanted them to have an experience: they walk into this room, they sit down on the couch, they find leaflets with clever advertisements about deepfake: “Tired of history telling you what happened? Try Deepfake!”… Everything to convey the idea that what you were experiencing was realistic but not actually real. And yet, many people were confused by the video and by the whole experience. The fact that it was a deepfake was not crystal clear to everybody. Some people thought the video of Nixon was real but had never aired, some that we had written the speech (and actually congratulated us for it!), others did not even know who President Nixon was. All things we had not considered and that we tried to explain to our audience after the experience itself. So, most of these people still got the message after we talked to them, but how many folks walked away without talking to us and therefore without getting the message we were trying to convey? A big lesson for us, for when we are thinking about future presentations.

Our piece was supposed to be at Tribeca last week and we were thinking a lot about how to manage some of that onboarding/offboarding stuff. We’re still sticking with the idea of encapsulating the aesthetic experience and then pulling back the curtain a bit, in particular through the forthcoming website, and saying “Ok, that was not real, despite what you felt emotionally when you experienced it. This is how we made it, this is what a deepfake is”. We hope to have the opportunity to do exactly that in future installations.

On the educational relevance of In Event of Moon Disaster

Elena: earlier you mentioned the educational value of In Event of Moon Disaster. Do you want to share this piece in schools too?

We brought on to the team an education producer, Joshua Glick, who is working on a curriculum for college students in media literacy where this installation will be the infrastructure around which media literacy is taught. We are working actively on that because we do think that there is a lot to do and gain from taking it into a rigorous academic setting.

This is important information that we need to talk about, but talking about it in the context of an actual work of art – which is also an immersive one – could have a much more significant impact than simply talking about it in the abstract. That is why we want to work in this way with this film, and that is also why Scientific American is producing a short documentary about the piece. It is a documentary about deepfakes and how you create them, but it is done in the context of our piece; how we made it, what the challenges were etc. Something that, hopefully, can make In Event of Moon Disaster even more impactful!

In Event of Moon Disaster is a production of the MIT Center for Advanced Virtuality directed by Francesca Panetta and Halsey Burgund and is supported by Mozilla and the MIT Open Documentary Lab.

We’d like to thank Halsey for the time spent explaining his work to us and for the passion he conveyed in every minute of our conversation! We also thank the whole team for creating something that not only thrilled us but opened our eyes to a world that, for the most part, was still unknown to us.

Keep visiting the In Event of Moon Disaster website to learn more about deepfakes and to follow their important educational project! And remember, be careful of what you read online: if they tell you I’m dating Russell Crowe there’s a 90% possibility that it’s fake news and an 80% possibility that I was the one spreading it (still, that 10% gives me hope).